In this project, we examine the distribution of real-world data: the stock prices of Google (ticker GOOG) from 2014 to 2018. We compare how well a normal distribution and a t-distribution fit the observed values. Finally, we fit a mixture of Gaussians to the data and note its scope and limitations.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

data = pd.read_csv('stock_prices.csv', parse_dates=True)

goog = data[data['Name'] == 'GOOG'].copy()

goog.head()

| | date | open | high | low | close | volume | Name |
|---|---|---|---|---|---|---|---|
| 251567 | 2014-03-27 | 568.000 | 568.00 | 552.92 | 558.46 | 13052 | GOOG |
| 251568 | 2014-03-28 | 561.200 | 566.43 | 558.67 | 559.99 | 41003 | GOOG |
| 251569 | 2014-03-31 | 566.890 | 567.00 | 556.93 | 556.97 | 10772 | GOOG |
| 251570 | 2014-04-01 | 558.710 | 568.45 | 558.71 | 567.16 | 7932 | GOOG |
| 251571 | 2014-04-02 | 565.106 | 604.83 | 562.19 | 567.00 | 146697 | GOOG |

goog.set_index('date')['close'].plot();

Prices depend on the price level and trend over time, whereas returns are scale-free relative changes centered near zero, so we will work with returns instead of prices.

goog['prev_close'] = goog['close'].shift(1)

goog['return'] = goog['close'] / goog['prev_close'] - 1
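The return defined above is the simple one-period return, r_t = P_t / P_{t-1} - 1. As a minimal sketch, the same quantity can be obtained with pandas' built-in `pct_change`. The price series below is hypothetical and only serves as an illustration; it is not the GOOG data. `fill_method=None` is passed so that no missing values are forward-filled before the change is computed.

```python
import numpy as np
import pandas as pd

# Hypothetical closing prices, for illustration only (not the GOOG data).
close = pd.Series([100.0, 102.0, 101.0, 105.0], name="close")

# Manual definition, as in the notebook: r_t = P_t / P_{t-1} - 1
manual = close / close.shift(1) - 1

# Built-in equivalent; fill_method=None disables forward-filling of NaNs.
builtin = close.pct_change(fill_method=None)

# The first return is undefined (NaN) in both versions.
print(pd.DataFrame({"manual": manual, "builtin": builtin}))
```

On the notebook's frame, the equivalent call would be `goog['close'].pct_change(fill_method=None)`.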

goog['return'].hist(bins=100);

goog['return'].mean()

0.000744587445980615

goog['return'].std()

0.014068710504926713

Normal Distribution

from scipy.stats import norm

x_list = np.linspace(goog['return'].min(), goog['return'].max(), 100)

y_list = norm.pdf(x_list, loc=goog['return'].mean(), scale=goog['return'].std())

plt.plot(x_list, y_list);

goog['return'].hist(bins=100, density=True);

We note that the Gaussian does not fit the data well: the returns have higher kurtosis, i.e. heavier tails, than the fitted normal density.
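The kurtosis claim can be checked numerically rather than by eye. The sketch below uses `scipy.stats.kurtosis`, which by default returns excess kurtosis (0 for a Gaussian). The two samples are synthetic stand-ins, assumed here for illustration: one normal, one Student-t with 5 degrees of freedom as an example of a heavy-tailed distribution.

```python
import numpy as np
from scipy.stats import kurtosis

rng = np.random.default_rng(0)

# Synthetic samples (assumptions for illustration, not the GOOG returns).
normal_sample = rng.normal(size=100_000)
heavy_sample = rng.standard_t(df=5, size=100_000)

# Default is the Fisher definition: excess kurtosis, 0 for a normal distribution.
k_normal = kurtosis(normal_sample)
k_heavy = kurtosis(heavy_sample)

print("excess kurtosis, normal sample:      ", k_normal)
print("excess kurtosis, heavy-tailed sample:", k_heavy)
```

On the notebook's data, `kurtosis(goog['return'].dropna())` would give the corresponding figure; a value well above 0 would support the observation above.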

t-Distribution

from scipy.stats import t as tdist

params = tdist.fit(goog['return'].dropna())

params

(3.4870263950708473, 0.000824161133877212, 0.009156583689241837)

df, loc, scale = params

y_list = tdist.pdf(x_list, df, loc, scale)

plt.plot(x_list, y_list);

goog['return'].hist(bins=100, density=True);

The t-distribution fits the data quite well. However, it has one more parameter than the normal distribution: the degrees of freedom, in addition to location and scale.

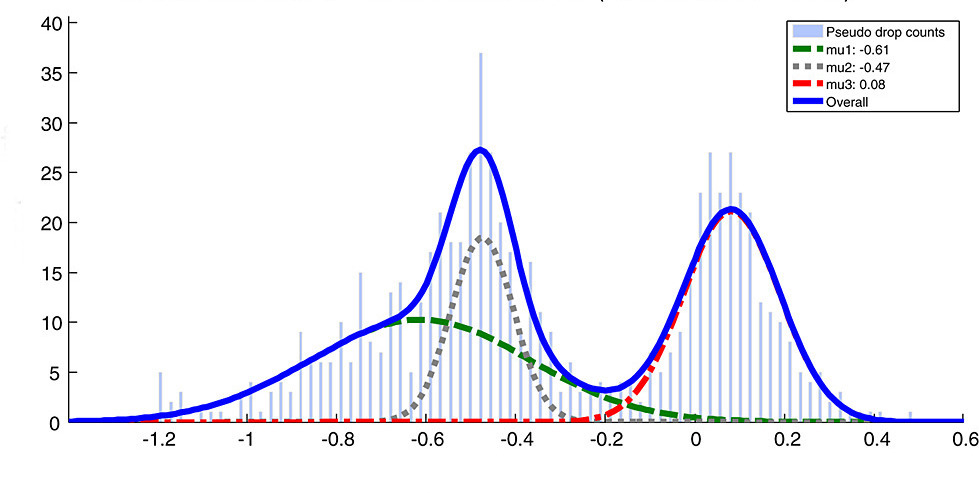
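One way to weigh the better fit against the extra parameter is an information criterion such as AIC = 2k - 2 log L, where k is the number of fitted parameters and lower is better. This comparison is not part of the original analysis; the sketch below applies it to a synthetic heavy-tailed sample (an assumption standing in for the returns), and the helper `aic` is a hypothetical name introduced here.

```python
import numpy as np
from scipy.stats import norm, t as tdist

rng = np.random.default_rng(0)

# Synthetic heavy-tailed "returns" (assumption for illustration only).
sample = 0.001 + 0.01 * rng.standard_t(df=4, size=2000)

def aic(log_likelihood, n_params):
    """Akaike information criterion; lower values indicate a better trade-off."""
    return 2 * n_params - 2 * log_likelihood

# Normal fit: 2 parameters (mean, standard deviation).
mu, sigma = norm.fit(sample)
ll_norm = norm.logpdf(sample, mu, sigma).sum()

# t fit: 3 parameters (degrees of freedom, location, scale).
df, loc, scale = tdist.fit(sample)
ll_t = tdist.logpdf(sample, df, loc, scale).sum()

aic_norm = aic(ll_norm, 2)
aic_t = aic(ll_t, 3)
print("AIC normal:", aic_norm)
print("AIC t:     ", aic_t)
```

Replacing `sample` with `goog['return'].dropna()` would run the same comparison on the actual returns.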

Mixture of Gaussians

from sklearn.mixture import GaussianMixture

data = np.array(goog['return'].dropna()).reshape(-1, 1)

model = GaussianMixture(n_components=2)

model.fit(data)

weights = model.weights_

means = model.means_

cov = model.covariances_

print("weights:", weights)

print("means:", means)

print("variances:", cov)

weights: [0.29533565 0.70466435]

means: [[-0.00027777] [ 0.00117307]]

variances: [[[5.05105403e-04]] [[6.96951828e-05]]]

means = means.flatten()

var = cov.flatten()

x_list = np.linspace(data.min(), data.max(), 100)

fx0 = norm.pdf(x_list, means[0], np.sqrt(var[0]))

fx1 = norm.pdf(x_list, means[1], np.sqrt(var[1]))

fx = weights[0] * fx0 + weights[1] * fx1

goog['return'].hist(bins=100, density=True)

plt.plot(x_list, fx, label='mixture model')

plt.legend();

We note the performance of the GMM with n_components=2: the fit is similar to that of the t-distribution. The model is less parsimonious, though. A univariate GMM with K components has 3K - 1 free parameters (K means, K variances, and K - 1 independent weights, since the weights sum to 1), so n_components=2 uses 5 parameters against 3 for the t-distribution.

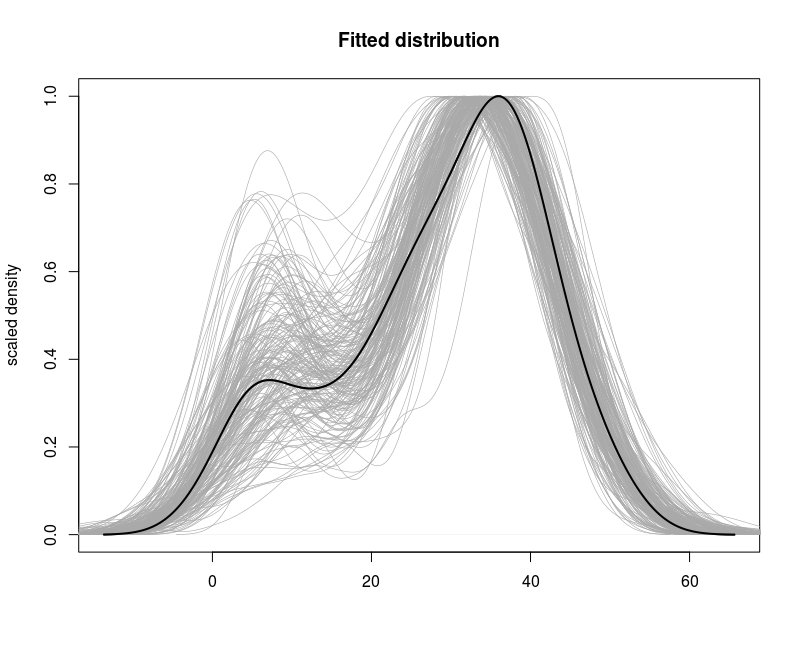
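The mixture density built by hand above, a weighted sum of component normal densities, can be cross-checked against scikit-learn itself: `GaussianMixture.score_samples` returns the log-density of the fitted mixture at each point. The sketch below runs the check on synthetic data (an assumption; the notebook's `data` array could be used instead), with `random_state=0` added only for reproducibility.

```python
import numpy as np
from scipy.stats import norm
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(0)

# Synthetic two-regime "returns" (assumption for illustration only).
data = np.concatenate([
    rng.normal(0.0, 0.022, 600),
    rng.normal(0.001, 0.008, 1400),
]).reshape(-1, 1)

model = GaussianMixture(n_components=2, random_state=0).fit(data)

x_list = np.linspace(data.min(), data.max(), 100)

# Manual mixture density: weighted sum of the component normal pdfs.
weights = model.weights_
means = model.means_.flatten()
var = model.covariances_.flatten()
fx_manual = sum(
    w * norm.pdf(x_list, m, np.sqrt(v)) for w, m, v in zip(weights, means, var)
)

# Built-in: score_samples gives the log-density, so exponentiate it.
fx_builtin = np.exp(model.score_samples(x_list.reshape(-1, 1)))

print("max abs difference:", np.abs(fx_manual - fx_builtin).max())
```

The built-in route works for any number of components without a manual loop.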

We note that GMMs are universal approximators. Hence, we can fit the distribution more closely with more Gaussians in the mixture. We try it with 10 components.

num_components = 10

model = GaussianMixture(n_components=num_components)

model.fit(data)

weights = model.weights_

means = model.means_

cov = model.covariances_

means = means.flatten()

var = cov.flatten()

fx = 0

x_list = np.linspace(data.min(), data.max(), 100)

for i in range(num_components):

fx += weights[i] * norm.pdf(x_list, means[i], np.sqrt(var[i]))goog['return'].hist(bins=100, density=True)

plt.plot(x_list, fx, label='mixture model')

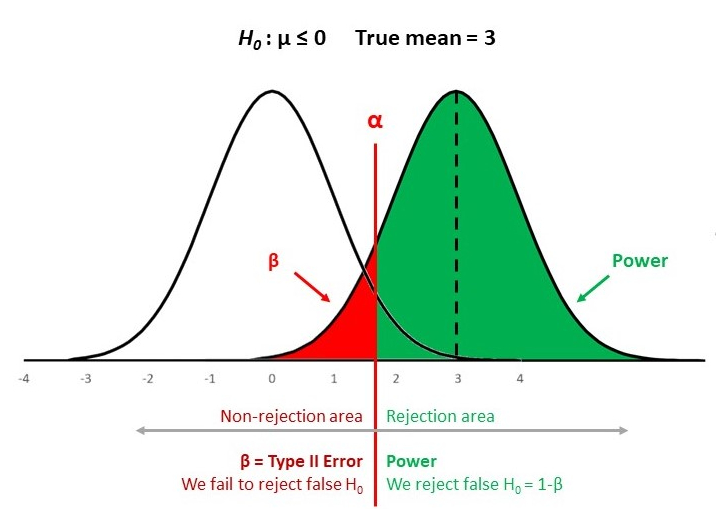

plt.legend();We note the fit is much better with n_components = 10. However, this comes at the cost of 16 additional params. Also, we're in the range of overfitting the data. Finally, there doesnt seem to exist simple Hypothesis testing routine for mixture of gaussians. The best I've seen are Bayes factor methods on the posterior distribution. I plan to discuss Bayesian methods for hypothesis testing on my next project.
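Short of a formal hypothesis test, a common heuristic for guarding against overfitting is to compare component counts with the Bayesian information criterion, which scikit-learn exposes as `GaussianMixture.bic` (lower is better). This is not the Bayes factor approach mentioned above and is not part of the original analysis; it is only a sketch on synthetic data (an assumption), with `n_free_params` a hypothetical helper that counts the free parameters of a univariate mixture.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

def n_free_params(k):
    """Free parameters of a univariate k-component GMM:
    k means + k variances + (k - 1) independent weights."""
    return 3 * k - 1

rng = np.random.default_rng(0)

# Synthetic two-regime "returns" (assumption for illustration only).
data = np.concatenate([
    rng.normal(0.0, 0.022, 900),
    rng.normal(0.001, 0.008, 2100),
]).reshape(-1, 1)

# Fit mixtures with 1..10 components and record the BIC of each.
bics = {}
for k in range(1, 11):
    model = GaussianMixture(n_components=k, random_state=0, max_iter=500).fit(data)
    bics[k] = model.bic(data)

best_k = min(bics, key=bics.get)
print("components chosen by BIC:", best_k)
print("free parameters at that size:", n_free_params(best_k))
```

Running the same loop on the notebook's `data` array would indicate whether the closer fit at 10 components justifies its 29 parameters under this criterion.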