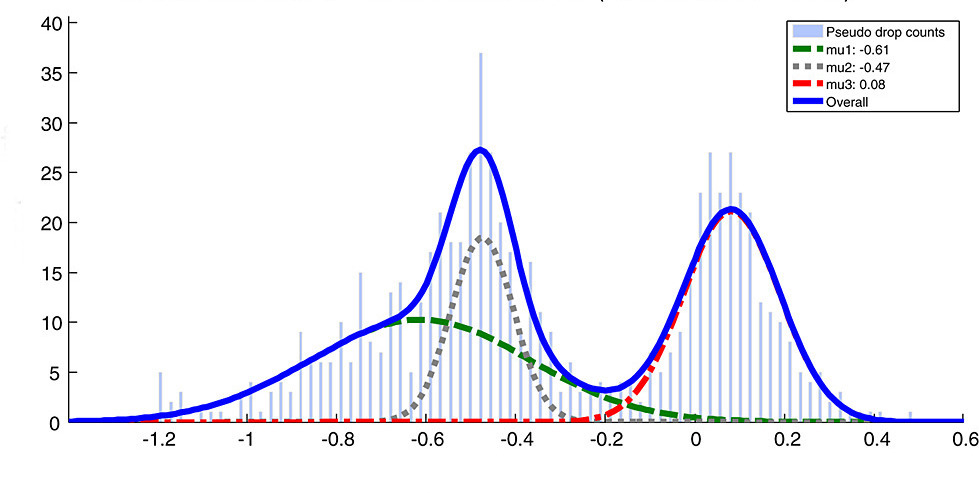

Gaussian Mixture Models

In this project we explore Gaussian Mixture Models (GMMs). GMMs are universal approximators: any probability density can be approximated arbitrarily well by a mixture of Gaussian densities, given enough components. We saw a glimpse of this in our Hypothesis Testing II project. GMMs are go-to models for unsupervised learning tasks such as clustering and density estimation, and they are one of my favorite ML models. They are usually fit with the Expectation-Maximization (EM) algorithm. EM is an iterative method for estimating statistical parameters, and I believe it is as fundamental to this class of models as backpropagation is to neural nets. In this...